Admin

Your Blackberry/iPhone flashes up a message from your staff: ‘The SAN’s down..water leakage from ducting in ceiling’

This is the single moment every IT Manager dreads.

Sure – the SAN has redundant Controllers and Dual Power Supplies, and it came with every RAID option (RAID 10, RAID 6, RAID 50 and RAID 60), but it is still a single chassis, sitting at the bottom of one of your racks.

Your ESX Virtual Machines are fine – you planned ahead and Clustered your ESX Servers and have the Fail-Over Server mounted two racks down from the leak.

And you have plenty of redundant switches, and AD/DNS Servers.

What you don’t have is a Storage Design that cost-effectively gives you Secondary Redundant Storage in a physically separate HA arrangement.

At the time of your SAN purchase 2 years ago, spending an extra £80,000 (plus) for a second SAN with software options for mirroring, replication, snapshots and fail-over, was not a financial option.

So how often would the above situation occur? My own observation from our clients is that you have a 50% chance of a SAN shut-down at least once in a 5 year period. Water leaking from overhead piping is the #1 cause. Electrical failures and short circuits have also taken out entire SAN chassis.
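To put that 5 year figure on a yearly footing, here is a rough sketch of the arithmetic. It assumes (my simplification, not something measured) that every year carries the same, independent risk; the function name is purely illustrative.

```python
def annual_failure_probability(p_period: float, years: int) -> float:
    """Yearly probability implied by a p_period chance of at least one event
    in `years` years, assuming each year is independent and equally risky."""
    return 1.0 - (1.0 - p_period) ** (1.0 / years)


# 50% chance of at least one SAN shut-down in 5 years (the observation above)
p_year = annual_failure_probability(0.5, 5)
print(f"Implied chance of a SAN shut-down in any one year: {p_year:.1%}")
```

Under that assumption the yearly risk works out at roughly 13%, which is a useful number to put in front of whoever signs off the budget.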

Loss

Once your SAN has failed, the loss to your business and reputation is something only you can calculate. What I can add is that even if a ‘new’ SAN could be delivered to your door step the next morning, the effort taken to:

- recover your data

- re-start your systems

- and then re-work / re-enter missing data or repair corrupted systems

will be measured in hundreds of hours for the IT Department and Business staff, and it’s likely that 3-4 weeks later you will still be dealing with missing data.

Designing for SAN Failure

There’s only one way around this:

- Physically separated Storage with Synchronous Mirroring

- With the Essential Element – The ability to ‘roll back’ each of the SAN nodes to past points in time

And by physically separated I mean:

- If you have your own computer room; try and get a few metres of separation

- have a look in the ceiling and see where the ducting and pipes run

- or, as one client found, they were able to reciprocate with another tenant in their office complex and cross-host a rack for each other

Sure you say: there’s no way my manager is splashing (no pun intended) out ‘6 figures’ to implement this type of system.

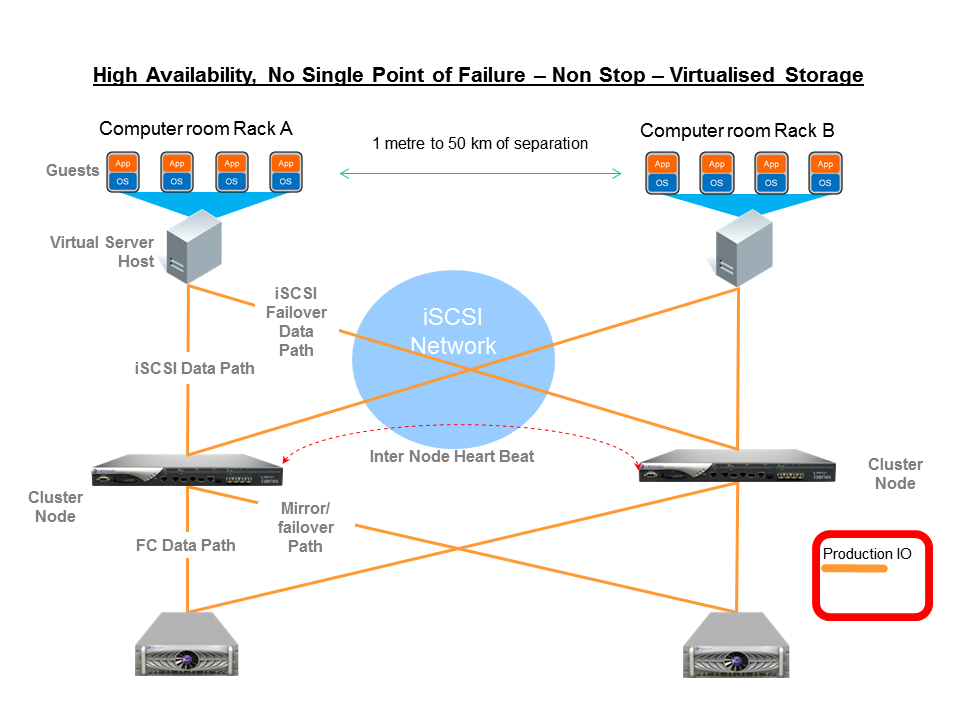

High Availability SANs

But there’s no need to spend anywhere near this figure.

A high performance, Physically Separated Storage System with Synchronous Mirroring and Snapshot capability can be implemented for under £10,000, and it will deliver significant performance for a ‘few’ TB of Data – i.e. enough to protect your critical billing systems and Exchange.

In this arena, solutions from DataCore, LeftHand (now HP) and NexSan have allowed people to start small and scale out their own ‘HA’ storage – essentially by placing the most critical ‘3TB’ of application data within the Physically separated SAN infrastructure and further down the line, as budget allows, adding more capacity to allow the remaining data and systems to have HA storage.

Dis-Similar Storage

You potentially also have half of the investment needed to create a High Availability Non Stop Storage SAN – that is, your existing SAN.

Solutions such as NexSan’s Clustering technology and DataCore’s SANsymphony and SANmelody work with:

- Dis-similar Storage arrays on each side of the ‘Cluster Node’

- In Fact, they can combine and ‘join together’ dis-similar Storage arrays behind a single Cluster node to create a large virtual pool of Storage

So it’s quite feasible to have an HDS array and a Dell MD3000 array ‘pooled’ together behind Cluster Node 1 while, sitting a few racks away, the ‘mirror’ partner is a Dell EqualLogic array.

Your Storage Design and Strategy is now vendor independent.

Synchronous vs Asynchronous

True non-stop High Availability SAN Pairs (i.e. milliseconds to just seconds of outage) in essence need Synchronous mirroring between the cluster nodes.

This implies a maximum distance between the two points of separation, as well as ‘Latency’ considerations – with Latency being the round trip travel time for a ‘data packet’ to travel between the cluster nodes.
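As a rough feel for how distance turns into latency, the sketch below estimates the round trip over fibre. It assumes light travels through glass at about 200,000 km per second (roughly two thirds of its speed in a vacuum); the function name and the optional equipment overhead parameter are my own illustrative additions, and real links add switch and protocol delays on top.

```python
FIBRE_KM_PER_SECOND = 200_000.0  # approximate speed of light in glass fibre


def round_trip_ms(distance_km: float, equipment_overhead_ms: float = 0.0) -> float:
    """Estimated round trip time in milliseconds between two cluster nodes.

    Only propagation delay is modelled; equipment_overhead_ms is a
    placeholder for switch, HBA and protocol delays you measure yourself.
    """
    one_way_seconds = distance_km / FIBRE_KM_PER_SECOND
    return 2 * one_way_seconds * 1000.0 + equipment_overhead_ms


for km in (0.1, 1.0, 10.0, 100.0):
    print(f"{km:>6} km -> {round_trip_ms(km):.3f} ms round trip (propagation only)")
```

Every synchronous write pays this round trip before it is acknowledged, which is why a few racks or a campus fibre ring is comfortable while a site 100 km away starts to add a noticeable delay to each write.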

Synchronous means our Secondary Cluster Node and Storage are an EXACT, real time mirror image of our primary storage. Every Disk Write is committed to the Secondary/Remote Node first, before being committed to the Primary node, with lots of complex ‘hand shaking’ to ensure these commits occur, and only then is the write acknowledged to the application. This way, Applications such as Exchange won’t miss a beat – they in essence have no idea the Primary Storage Node has failed or that they are now being serviced by the secondary node.
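The write path described above can be sketched in a few lines. This is a toy model under my own assumptions, not how any particular vendor implements it: `Node` and `SyncMirror` are hypothetical names, storage is just a dictionary, and the ‘hand shaking’ is reduced to "raise an error if a node cannot commit".

```python
class Node:
    """Toy storage node: a dict of block address -> data."""

    def __init__(self, name: str):
        self.name = name
        self.blocks = {}
        self.online = True

    def commit(self, lba: int, data: bytes) -> None:
        if not self.online:
            raise OSError(f"{self.name} is offline")
        self.blocks[lba] = data


class SyncMirror:
    """Acknowledge a write only after BOTH nodes have committed it."""

    def __init__(self, primary: Node, secondary: Node):
        self.primary = primary
        self.secondary = secondary

    def write(self, lba: int, data: bytes) -> bool:
        # Order follows the description above: remote node first, then local.
        self.secondary.commit(lba, data)
        self.primary.commit(lba, data)
        return True  # the application sees the ack only at this point

    def read(self, lba: int) -> bytes:
        # Serve from the primary if it is up, otherwise from the mirror.
        node = self.primary if self.primary.online else self.secondary
        return node.blocks[lba]


primary, secondary = Node("rack-A"), Node("rack-B")
mirror = SyncMirror(primary, secondary)
mirror.write(7, b"exchange-log-record")

primary.online = False           # the ceiling leaks onto rack A
survivor = mirror.read(7)        # still served, now from rack B
print(survivor)
```

Because nothing is acknowledged until both copies exist, the surviving node always holds every write the application believes it has made.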

Synchronous works fine across 1Gbps copper links and 4Gbps Fibre Channel. In some cases, where clients are all 1Gbps copper, I will put in a dedicated 10Gbps copper link to act as the ‘mirror highway’ between separated racks in the one computer room. (No need for 10Gig switches here, as Point to Point with Cross over cables is far more cost-effective.)

Once distance starts to stretch further than a Kilometre or so, a Fibre connection becomes the most cost-effective means of creating the mirror connection. Some of the manufacturing and education clients we deal with have large factory and campus sites, and often have a fibre ring in place already.

Without low latency, or with slow links between two sites, we are forced to use Asynchronous Replication. This inherently means data on the ‘Secondary’ cluster node will ‘lag behind’ what is being written to the Primary Cluster node, whether by tens of milliseconds, a few seconds or possibly minutes.

It doesn’t really matter what the lag time is – The Secondary Node is not in a state able to seamlessly take over the running of your Database Applications such as Exchange, SQL and Oracle. Logs, Indexes, Queues and Databases will potentially all be in differing ‘time states’, resulting in the Applications reporting the need for integrity checks.
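To illustrate why any lag at all is a problem, here is a minimal sketch of asynchronous replication, again a toy model under my own assumptions: `AsyncReplica` is a hypothetical name, and the three block addresses simply stand in for a database page, its log record and an index.

```python
from collections import deque


class AsyncReplica:
    """Toy asynchronous replication: ack locally, ship to the mirror later."""

    def __init__(self):
        self.primary = {}
        self.secondary = {}
        self.pending = deque()  # writes acknowledged but not yet replicated

    def write(self, lba: int, data: bytes) -> None:
        self.primary[lba] = data          # acknowledged straight away
        self.pending.append((lba, data))  # queued for the slow link

    def replicate(self, max_writes: int) -> int:
        """Ship up to max_writes queued writes; returns how many were sent."""
        sent = 0
        while self.pending and sent < max_writes:
            lba, data = self.pending.popleft()
            self.secondary[lba] = data
            sent += 1
        return sent

    def unreplicated_lbas(self) -> list:
        """Blocks the secondary would be missing if the primary died now."""
        return [lba for lba, _ in self.pending]


san = AsyncReplica()
san.write(1, b"database page")
san.write(2, b"transaction log record")
san.write(3, b"index update")
san.replicate(max_writes=1)      # the link only keeps up with one write

missing = san.unreplicated_lbas()
print("Secondary is missing blocks:", missing)
```

If the primary fails at this point, the secondary holds the database page but not the matching log record or index update, which is exactly the ‘differing time states’ situation that sends the application off to run integrity checks.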

We will cover Asynchronous Replication in another Blog entry – as it is the ideal form of replication for True Disaster Recovery – but Asynchronous always implies ‘minutes to hours’ of outage.

Summary

Small to Medium Sized Businesses often run with small IT teams – and it might be many months or many years since you’ve practised handling a SAN disaster.

At Hundreds of thousands of pounds, it’s understandable that ‘Secondary non-stop’ storage was just not affordable – but that’s just not the case now. Using some of the technologies mentioned, a Secondary High Availability node can be incorporated into your existing infrastructure, using your existing (and dis-similar) SAN hardware.

The technical complexity to achieve true HA is very low – some of the systems and architectures I have mentioned here are installed in small High School scenarios with 1TB of data and 2 IT staff, as well as 100TB+ scenarios with 30-40 IT staff.

If you can ‘stretch’ just a few racks away in your computer room, that’s a start. If you can ‘stretch’ a few hundred metres and also locate some switches and other infrastructure in a ‘Secondary’ rack, then you will have near Enterprise Class Resilience at a figure probably 1/4 to 1/5th of what you had previously considered.